Visionary Digital Evolution Strategist

Rooted in Formula 1 excellence, with over 30 years in IT starting as a child in the 1980s, …

Hey there, fellow digital warriors! ⚔️

Before we jump into today’s AI code generator buzz, let’s take a quick stroll down memory lane to Episode 23. Remember that jaw-dropping tale about The Deadly Price of Cheap Code? Yep, we’re talking about Boeing’s tragedy and the looming AI crisis. (ICYMI: check it out here) 🔥 It was a raw, no-holds-barred look at how cutting corners in software engineering leads to disasters—no exceptions. This wasn’t just a coding error; it was a full-blown catastrophe with lives lost 😞.

And here’s the kicker: If we keep pouring trillions into AI while laying off skilled engineers like we’re chopping down trees in a forest, the next disaster could make Boeing look like a fender bender. And it might not just hit “some company.” It could be your company, your family, or even us, staring down the consequences of this AI free-for-all. Software is everywhere, and if we keep letting AI churn out subpar, untested code, we’re only setting new records for failure. Buckle up, because what you’re about to read will make you rethink everything. 🚨

Let’s be real: software engineers aren’t just developers with fancier job titles and bigger paychecks. Nope, it’s all about the discipline. We’re talking testing, CI/CD pipelines, and that unwavering commitment to clean code and solid architecture. And it doesn’t stop there—software engineers are master communicators, seamlessly switching between tech-speak and business lingo in the blink of an eye, all while negotiating with users and stakeholders to make sure what’s built actually works.

Engineers build systems that last, systems that don’t fall apart the second you look away. Now, developers? Sure, they can crank out some hot code, but when it comes to long-term quality, architecture, and teamwork? That’s where things go sideways. Without engineering discipline, you end up with sloppy code: no tests, no validation, just a ticking time bomb. And the moment things go wrong? Cue the blame game. Everyone’s throwing shade, and suddenly it feels like a ninja ambush, not a collaborative team effort. 😬

And here’s the brutal truth: companies know this. That’s why developers get just enough pay to keep them around, barely nudging wages past inflation. Engineers, though? They’re the ones who get called in to clean up the mess after the explosion. 💥

### The AI Code Generator Hype: Utopia or the Next Big Thing?

Now let’s switch gears for a second. AI code generators. Everyone’s freaking out about them, right? We’ve got GitHub Copilot, Cursor.ai, Codeium.com, Devin.ai, and even JetBrains AI jumping into the ring like it’s a WWE match for your code. 🤖💻

But here’s the thing:

I’m skeptical 🤨. Will these shiny new toys really replace software engineers?

Spoiler alert:

🙈 Not anytime soon.

They’re fine for quick fixes, cranking out boilerplate code, and maybe saving you when you’re in a bind. But can they architect entire systems or think deeply about security, efficiency, or long-term stability? Not unless you’re ready to sign up for a dumpster 🔥 fire of technical debt.

Here’s where things get interesting, though. After running these tools through a few tests, I’ve realized something: if you treat these AI tools like a virtual co-pilot (pun intended) instead of a lead navigator, they can actually add some value. Think of them as that ultra-fast sidekick who can dig up options, check documentation, and handle the busy work, all at lightning speed. In that role, they’re a nice addition to a pair or mob programming session. But handing over the steering wheel to AI? Forget about it 🚫.

To really drive home why I’m so skeptical 🤨, let me introduce a third-party reviewer who doesn’t pull any punches: Carl Brown. If you haven’t heard of Carl and his YouTube channel Internet of Bugs, do yourself a favor and check it out. His videos are where code meets comedy, and trust me, you’ll be laughing and cringing at the same time. Carl’s latest video is absolute gold—he pits several AI code generators against each other in a head-to-head showdown. 💥

What’s genius about Carl’s approach is the simplicity. He took the same exact kata from Codecrafters.io—building an HTTP server—and tossed it at all the AI code generators to see what they could do. No special treatment, no variations. Just the same problem, the same tests, and Carl? He just sat back and let the bots duke it out.

The results? Cursor.ai came out on top. 🏆 Yeah, it won the battle, but here’s the twist:

while it generated “better” code than the rest, it wasn’t exactly good code 😞.

Sure, Cursor spit out some decent stuff (we’ll dive into that in a bit), but guess what was completely missing across the board?

That’s right, every single AI-generated solution was missing one crucial thing: TESTS. 🤯

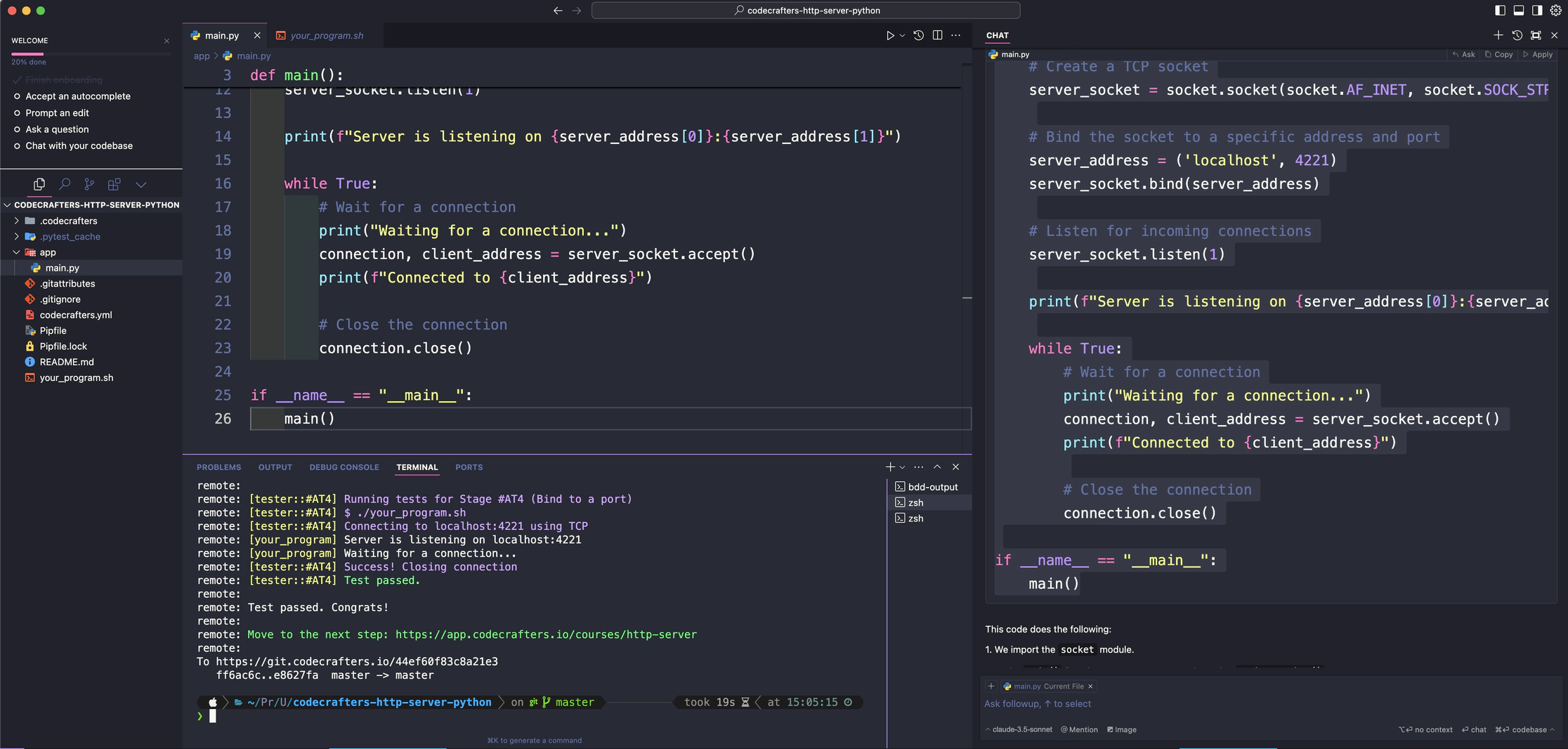

Alright, here’s the “wow, are you serious?” moment. Take a look at Cursor.ai’s best attempt to create an HTTP server:

Looks pretty slick, right? At first glance, not a bad start. But wait—where are the tests? And I’m not talking about the basic checks Codecrafters.io uses to confirm the task completion. I’m talking about real tests—unit tests, integration tests, anything to validate that the code won’t blow up in production. Guess what?
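To make “real tests” concrete, here’s a minimal sketch of what unit tests for one small piece of an HTTP server could look like. It assumes a hypothetical `parse_request` helper that splits a raw request into method, path, and headers. This is my own illustration, not code from Cursor or any other tool in Carl’s video. It uses Python’s built-in `unittest` module:

```python
import unittest


def parse_request(raw: bytes) -> tuple[str, str, dict[str, str]]:
    """Split a raw HTTP/1.1 request into (method, path, headers).

    Hypothetical helper for illustration only; the body, if any, is ignored.
    """
    head, _, _body = raw.partition(b"\r\n\r\n")
    request_line, *header_lines = head.decode("iso-8859-1").split("\r\n")
    parts = request_line.split(" ")
    if len(parts) != 3:
        raise ValueError(f"malformed request line: {request_line!r}")
    method, path, _version = parts
    headers = {}
    for line in header_lines:
        # Split on the first colon only, so values like "host:port" survive.
        name, sep, value = line.partition(":")
        if not sep:
            raise ValueError(f"malformed header line: {line!r}")
        # Header names are case-insensitive, so store them lower-cased.
        headers[name.strip().lower()] = value.strip()
    return method, path, headers


class ParseRequestTests(unittest.TestCase):
    def test_extracts_method_and_path(self):
        method, path, _ = parse_request(b"GET /echo/abc HTTP/1.1\r\n\r\n")
        self.assertEqual((method, path), ("GET", "/echo/abc"))

    def test_header_lookup_is_case_insensitive(self):
        raw = b"GET / HTTP/1.1\r\nUser-Agent: curl/8.0\r\n\r\n"
        _, _, headers = parse_request(raw)
        self.assertEqual(headers["user-agent"], "curl/8.0")

    def test_header_value_may_contain_colons(self):
        _, _, headers = parse_request(b"GET / HTTP/1.1\r\nHost: localhost:4221\r\n\r\n")
        self.assertEqual(headers["host"], "localhost:4221")

    def test_rejects_malformed_request_line(self):
        with self.assertRaises(ValueError):
            parse_request(b"GARBAGE\r\n\r\n")

    def test_rejects_header_without_colon(self):
        with self.assertRaises(ValueError):
            parse_request(b"GET / HTTP/1.1\r\nNoColonHere\r\n\r\n")
```

Save it as, say, `test_parser.py` (a hypothetical filename) and run `python -m unittest test_parser`. Notice that almost half the tests check what happens with *bad* input. That’s exactly the kind of edge case a happy-path completion check never exercises.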

There’s nothing. 😱

We’re talking zero test coverage, no safety net to catch bugs before this code hits production. And without tests, who knows what’ll happen when this server needs to scale? For all we know, it could crash and burn the moment traffic spikes, and there’d be no way to predict it until it’s too late. 🔥
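Here’s a hedged sketch of an integration test that could at least give you an early warning about concurrency problems. Everything in it is an assumption for illustration. A toy `EchoHandler`, built on Python’s standard `socketserver` module, answers `GET /echo/<text>`. The test starts it on a random local port and hits it with 50 requests from 10 client threads at once. It’s no substitute for real load testing, but it’s a safety net the generated code simply didn’t have:

```python
import http.client
import socketserver
import threading
import unittest
from concurrent.futures import ThreadPoolExecutor


class EchoHandler(socketserver.StreamRequestHandler):
    """Toy handler (hypothetical): answers GET /echo/<text> with <text>."""

    def handle(self):
        request_line = self.rfile.readline().decode("iso-8859-1").rstrip("\r\n")
        # Drain the remaining header lines up to the blank line that ends them.
        while self.rfile.readline() not in (b"\r\n", b"\n", b""):
            pass
        parts = request_line.split(" ")
        path = parts[1] if len(parts) == 3 else ""
        if path.startswith("/echo/"):
            status, body = "200 OK", path[len("/echo/"):].encode()
        else:
            status, body = "404 Not Found", b""
        head = (
            f"HTTP/1.1 {status}\r\n"
            "Content-Type: text/plain\r\n"
            f"Content-Length: {len(body)}\r\n"
            "Connection: close\r\n\r\n"
        )
        self.wfile.write(head.encode() + body)


class EchoServer(socketserver.ThreadingTCPServer):
    # One thread per connection; a larger listen backlog so bursts aren't dropped.
    request_queue_size = 64


def fetch(port: int, path: str) -> tuple[int, bytes]:
    """Send one GET request over a fresh connection and return (status, body)."""
    conn = http.client.HTTPConnection("127.0.0.1", port, timeout=5)
    try:
        conn.request("GET", path)
        response = conn.getresponse()
        return response.status, response.read()
    finally:
        conn.close()


class EchoServerIntegrationTest(unittest.TestCase):
    def setUp(self):
        # Port 0 asks the OS for any free port.
        self.server = EchoServer(("127.0.0.1", 0), EchoHandler)
        self.port = self.server.server_address[1]
        threading.Thread(target=self.server.serve_forever, daemon=True).start()

    def tearDown(self):
        self.server.shutdown()
        self.server.server_close()

    def test_unknown_path_returns_404(self):
        status, _ = fetch(self.port, "/nope")
        self.assertEqual(status, 404)

    def test_concurrent_echo_requests(self):
        paths = [f"/echo/req{i}" for i in range(50)]
        with ThreadPoolExecutor(max_workers=10) as pool:
            results = list(pool.map(lambda p: fetch(self.port, p), paths))
        # pool.map preserves input order, so result i belongs to request i.
        for i, (status, body) in enumerate(results):
            self.assertEqual(status, 200)
            self.assertEqual(body, f"req{i}".encode())
```

Binding to port 0 lets the OS pick a free port, so the test never collides with anything else running on your machine.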

This is the harsh reality of relying too much on AI-generated code. Sure, it’s fast. But fast doesn’t mean good. The code comes out with:

What we get is a pile of unmaintainable spaghetti code that’s doomed from day one. It’s the perfect recipe for a technical debt nightmare—and guess what? You’ll be paying the price when it’s time to refactor and untangle the mess.

So, is Cursor the “best” right now? Maybe… if all you need is a starting point. But don’t fool yourself into thinking this is the future of quality software. Human brains are still necessary—software engineers who understand the problem, design incrementally, and write tests that certify the code behaves as expected. When the time comes to refactor and simplify that inevitable complexity (essential and accidental), those tests will be your lifeline. They’ll ensure that your code isn’t just working but clean, understandable, and maintainable for the next person who touches it. Because let’s be honest—AI-generated code is a cognitive overload waiting to happen.
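Here’s a minimal sketch of that lifeline in action, assuming a hypothetical router for the HTTP server kata. `route_tangled` stands in for the nested, redundant logic you tend to get from a generator, and `route_refactored` is the cleaned-up version. Both names and the routes are illustrative assumptions. One shared table of expected behaviour runs against both versions, so the refactoring only counts as done when every case still passes:

```python
import unittest


def route_tangled(path: str, user_agent: str) -> tuple[int, str]:
    """Hypothetical 'before' router: nested branches and a redundant length check."""
    if path == "/":
        return 200, ""
    else:
        if path.startswith("/echo/"):
            if len(path) > len("/echo/"):
                return 200, path[len("/echo/"):]
            else:
                return 200, ""
        else:
            if path == "/user-agent":
                return 200, user_agent
            else:
                return 404, ""


def route_refactored(path: str, user_agent: str) -> tuple[int, str]:
    """Hypothetical 'after' router: flat guard clauses, same behaviour."""
    if path == "/":
        return 200, ""
    if path.startswith("/echo/"):
        # Slicing past the prefix already yields "" for a bare "/echo/".
        return 200, path[len("/echo/"):]
    if path == "/user-agent":
        return 200, user_agent
    return 404, ""


# The behaviour we promise, written once: (path, user agent, expected result).
CASES = [
    ("/", "curl", (200, "")),
    ("/echo/abc", "curl", (200, "abc")),
    ("/echo/", "curl", (200, "")),
    ("/user-agent", "curl/8.0", (200, "curl/8.0")),
    ("/missing", "curl", (404, "")),
]


class RoutingSpec(unittest.TestCase):
    def test_both_versions_meet_the_same_spec(self):
        for impl in (route_tangled, route_refactored):
            for path, user_agent, expected in CASES:
                # subTest reports each failing (impl, path) pair separately.
                with self.subTest(impl=impl.__name__, path=path):
                    self.assertEqual(impl(path, user_agent), expected)
```

In practice you’d keep only `route_refactored` once the suite is green. The point is that the same `CASES` table judged both versions, so the cleanup couldn’t quietly change what the server does.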

Now that you’ve seen why untested code is a ticking time bomb for any forward-thinking CXO, stay tuned for the next episode, where we’ll dive deeper into how your developers can evolve into true software engineers. We’ll explore the journey to becoming skilled crafters, built on over a decade of experimentation and refinement in the SW Craftsmanship Dojo®. Until then, keep your code clean 🧼 and your tests green ✅!

To stay in the loop with all our updates, be sure to subscribe to our newsletter 📩 and podcast channels 🎧:

🎥 YouTube

📻 Spotify
