Hands-On Engineering Lead & Fractional CTO | Technical Coach | Guiding Organizations to Excellence

With over 15 years as a Senior …

With the rise of ChatGPT, Gemini (formerly Bard), GitHub Copilot, Devin, and other AI tools[1], developers started to fear that AI tooling would replace them. Even though these tools’ capabilities are indeed impressive, I don’t fear our jobs will go away in 2024.

Let’s start with understanding what we do as software developers.

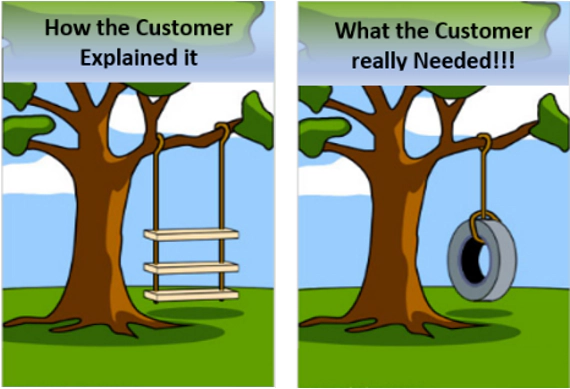

Assuming that, by some miracle, we know exactly what the client needs, there is still one more gotcha: communication and precision.

In most cases, software products are delivered by teams, not one-man armies. If there is any miscommunication or misunderstanding between any two members of the team, they will have different understandings of the desired outcome. Not to mention the edge cases and tiny details of the solution that nobody clarified.

If those are not resolved, the product they deliver will be different from what the client needs.

Identifying the problem and its solution needs a deep understanding of human nature and behavior. Currently, AI tools don’t have that. Even most humans lack it - no wonder many organizations struggle with their software delivery.

On top of this, there is a fundamental difference between humans and AI. We are pretty good at understanding vague descriptions. We have decades of life experience that we can use to fill the gaps and resolve contradictions in what people ask of us. Machines, on the other hand, will do exactly what we ask them to do.

There is a simple reason we are so good at working from bad specifications. 99% of the time, in any part of life, we have to work with flawed information, so we had to learn how to deal with it effectively. AI tools are not there (yet). They need a very comprehensive and precise specification.

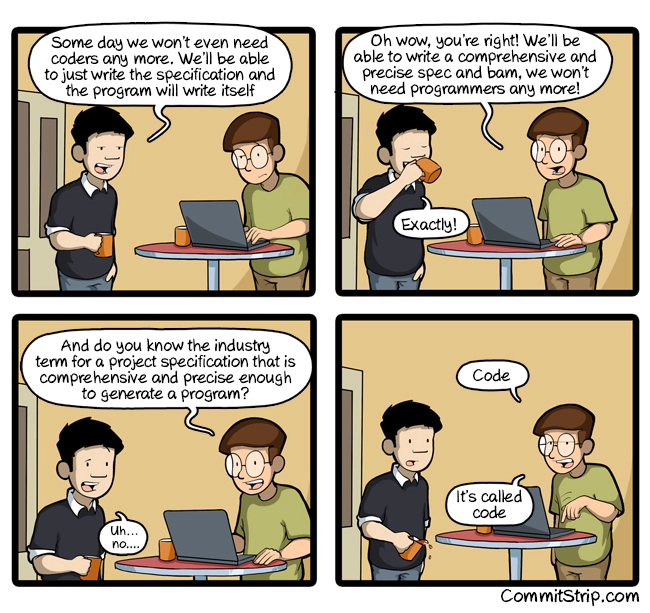

If we want to boil it down, generative AI[2] tools that create code are backed by large language models[3]. In a nutshell, they are trained on enormous amounts of text (code), and based on the context, they predict what could come next. There are multiple possible continuations. Based on their settings, they will go with one of the most likely ones.
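To make the “predict what could come next” idea more tangible, here is a minimal Python sketch. It is a toy under stated assumptions, not how any particular tool is implemented: the candidate tokens and their scores are made up, and `sample_next_token`, `temperature`, and `top_k` are illustrative names standing in for the “settings” mentioned above. A real model would compute the scores from the context.

```python
import math
import random
from typing import Optional


def sample_next_token(
    scores: dict[str, float],
    temperature: float = 1.0,
    top_k: int = 3,
    rng: Optional[random.Random] = None,
) -> str:
    """Pick one continuation from made-up candidate scores (hypothetical helper)."""
    rng = rng or random.Random()
    # Keep only the k highest-scoring candidates.
    top = sorted(scores.items(), key=lambda kv: kv[1], reverse=True)[:top_k]
    if temperature <= 0:
        # Greedy setting: always take the single most likely continuation.
        return top[0][0]
    # Softmax with temperature: a higher temperature flattens the distribution.
    best = top[0][1]
    weights = [math.exp((score - best) / temperature) for _, score in top]
    tokens = [token for token, _ in top]
    return rng.choices(tokens, weights=weights, k=1)[0]


# Invented scores for what might follow "for (let i = 0; i < ".
scores = {"n": 4.0, "items.length": 3.2, "10": 2.5, "banana": -3.0}
print(sample_next_token(scores, temperature=0))    # greedy: always "n"
print(sample_next_token(scores, temperature=1.0))  # one of the three most likely tokens
```

Nothing in this loop checks whether the chosen continuation is correct. It only checks whether it is likely.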

This works surprisingly well. But it also means that - unlike humans - AI tools can’t create something completely new. They need existing references in their training data.

It means two things:

Training an AI to use a new technology is pretty easy. We need to show it enough code examples that use that technology. The problem is that someone has to write those examples first. We are back to square one.

Solving a complicated problem can be challenging for humans, too. But we can understand and analyze a new problem, and come up with a solution. AI can only predict a solution, and for new problems that’s nothing more than guesswork. Like when we try to tell a dinosaur’s color from its remains.

Let’s say we have a well-defined problem that’s not challenging and we want to implement it using a mature technology. For example, we need a registration form with plain old HTML and a Node.js + Express backend. That’s familiar ground for AI tools, so we can bravely use them to generate the code. They even create tests[4]. Everything is working, we delivered it in record time, and the client is satisfied. This seems like a win-win situation. But is it?

What happens if the next day the client asks to integrate the newest and shiniest captcha? We already know that since it’s a new technology, the AI most likely won’t be familiar with it. No problem, it seems a simple task. We are confident we can implement it ourselves in no time.

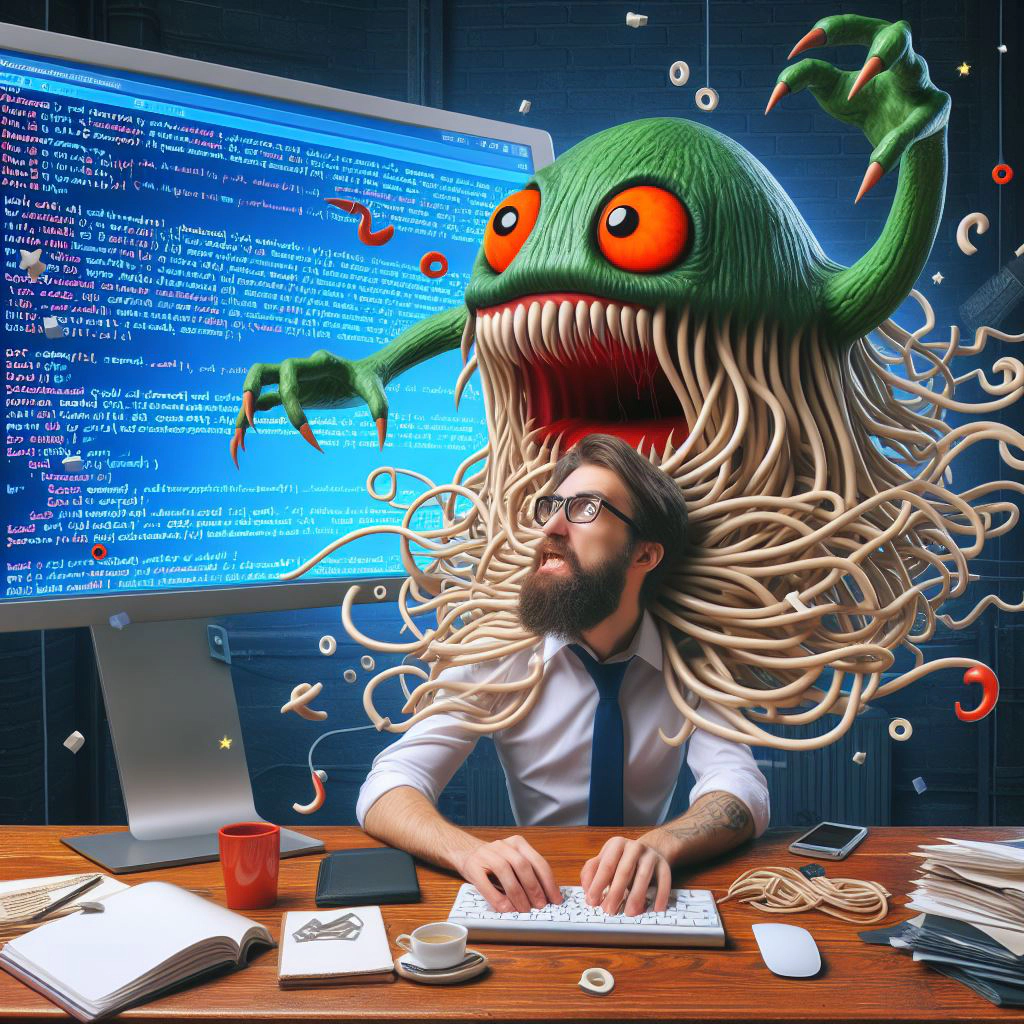

If the generated code is high-quality, we indeed don’t have any problems. But what happens if it isn’t? What if it’s the worst spaghetti code we have ever faced?

We either try to tame it and make it more maintainable (maybe with the AI tool we already lost confidence in), or trash it and rewrite it from scratch. Either way, it will take too much time compared to the initial solution.

Because of these AI limitations, humans will have to understand and maintain the code. If it’s low quality, or even too smart[5], we will have a very hard time dealing with it. And it’s tricky to ensure the quality of generated code.
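The Quake example from the footnote, usually known as the fast inverse square root, shows what “too smart” looks like in practice. Below is a Python port as a sketch (the original is C, and the function name `fast_inv_sqrt` is my own). It approximates 1/sqrt(x) for positive numbers, but good luck telling why from the code alone.

```python
import struct


def fast_inv_sqrt(x: float) -> float:
    """Approximate 1 / sqrt(x) for x > 0, ported from the well-known C trick."""
    # Reinterpret the 32-bit float's bits as an unsigned integer.
    i = struct.unpack("<I", struct.pack("<f", x))[0]
    # The famous "magic constant" step: a cheap first guess.
    i = 0x5F3759DF - (i >> 1)
    # Reinterpret the integer's bits as a float again.
    y = struct.unpack("<f", struct.pack("<I", i))[0]
    # One Newton-Raphson iteration to refine the guess.
    return y * (1.5 - 0.5 * x * y * y)


print(fast_inv_sqrt(4.0))  # roughly 0.499, versus the exact 0.5
```

If a tool generates something like this without comments, the person who has to change it next pays the price.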

Most code is created for and by companies to generate revenue. Who owns AI-generated content is still an unregulated question. The prompt writer? The AI tool’s creator? The owner of the training data?

The problem becomes even tougher if we think about the code’s behavior and who is responsible for it. What if the code causes harm? For example, it deletes some important files, sells stocks and we lose money, or controls hardware that harms humans. Whose responsibility will that be?

This question is tricky enough with self-driving cars[6]. It becomes a disaster if we add AI-generated code to the mix[7].

With that level of uncertainty, many companies don’t want to jump on the AI train. I can’t blame them.

As we saw, AI tooling has major limitations when it comes to software development. But it’s nothing more than how things stand in April 2024. AI solutions evolve at an incredible speed. No doubt they will become much better not only in the coming years but also in the coming months.

In this post, we mostly talked about limitations. Fortunately, it doesn’t mean that these tools aren’t useful. In a follow-up post, we’ll see how we can and should use them to maximize our productivity. Because it’s already a cliche that AI won’t steal our jobs. Someone who knows how to use AI will.

[1] A non-comprehensive list: AlphaCode, Tabnine, Sourcery, DeepCode AI, WPCode, Ask Codi, Codiga, Jedi, CodeWhisperer, Magic

[2] https://en.wikipedia.org/wiki/Generative_artificial_intelligence

[4] But can we trust those tests? We’ll talk more about that in the next post.

[5] A great example is the fast inverse square root function in the Quake III Arena engine.

[7] Maybe it’s time to retrain ourselves as lawyers so we’ll have plenty of work when we’re not needed as developers.
